We are happy to announce that our work “TATL: Task Agnostic Transfer Learning for Skin Attributes Detection” has been accepted by the prestigious journal “Medical Image Analysis”. The work is a collaboration among DFKI, MPI, the University of California (Berkeley), and Oldenburg University, among others.

Existing skin attribute detection methods usually start from a network pre-trained on ImageNet and then fine-tune it on a medical target task. However, we argue that such approaches are suboptimal because medical datasets differ substantially from ImageNet and often contain only a limited number of training samples.

In this work, we propose Task Agnostic Transfer Learning (TATL), a novel framework motivated by how dermatologists work in the skincare context. Our method first learns an attribute-agnostic segmenter that detects skin lesion regions, and then transfers this knowledge to a set of attribute-specific classifiers, each of which detects one particular attribute. Because TATL’s attribute-agnostic segmenter only detects skin attribute regions, it can use the ample data available across all attributes, allows knowledge to be transferred among features, and compensates for the lack of training data for rare attributes. The empirical results show that TATL not only works well with multiple architectures but also achieves state-of-the-art performance with minimal model and computational complexity (30-50 times fewer parameters). We also provide theoretical insights and explanations for why our transfer learning framework performs well in practice.
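The two-stage idea can be illustrated with a small NumPy sketch. This is not the TATL implementation: it only assumes that the attribute-agnostic target is the union of the per-attribute masks and that each attribute-specific model starts from a copy of the agnostic weights. The helper names `union_mask` and `init_attribute_models`, the toy masks, and the weight dictionary are all hypothetical.

```python
import numpy as np

def union_mask(attribute_masks):
    """Merge per-attribute binary masks into one attribute-agnostic mask.

    Assumption for illustration: a pixel is positive if any attribute marks it.
    """
    stacked = np.stack(list(attribute_masks.values()), axis=0).astype(bool)
    return stacked.any(axis=0).astype(np.uint8)

def init_attribute_models(agnostic_weights, attribute_names):
    """Give every attribute-specific model its own copy of the agnostic weights."""
    return {
        name: {key: value.copy() for key, value in agnostic_weights.items()}
        for name in attribute_names
    }

# Toy 4x4 annotations: one large region and a few small disconnected pixels.
masks = {
    "pigment_network": np.array(
        [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]], dtype=np.uint8
    ),
    "negative_network": np.array(
        [[0, 0, 0, 0], [0, 0, 0, 1], [0, 0, 0, 0], [1, 0, 0, 0]], dtype=np.uint8
    ),
}

# Stage 1 target: every annotated pixel, regardless of attribute.
target = union_mask(masks)

# Stage 2 initialisation: placeholder weights standing in for a trained segmenter.
agnostic_weights = {"encoder": np.zeros((3, 3)), "decoder": np.ones((3, 3))}
models = init_attribute_models(agnostic_weights, masks.keys())
```

In this toy setup the rare attribute contributes its few pixels to the shared target, so the first stage sees supervision from every attribute before any attribute-specific fine-tuning begins.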

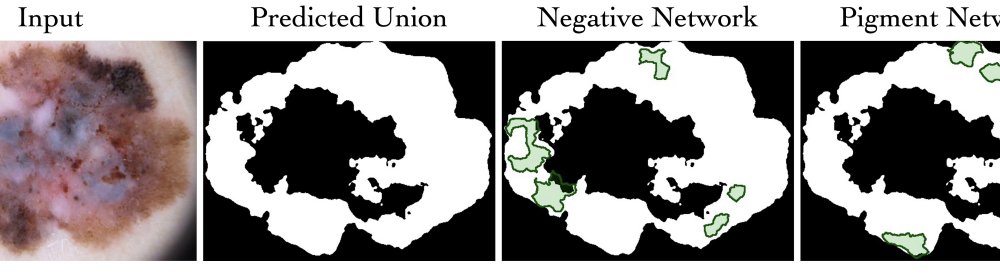

The figure above demonstrates the usefulness of TATL: the predicted skin lesion regions (predicted union) can cover both large regions, as in Pigment Network, and small disconnected regions, as in Negative Network.

Projects: pAItient (BMG), Ophthalmo-AI (BMBF)