Funding period 04/2017 – 06/2020

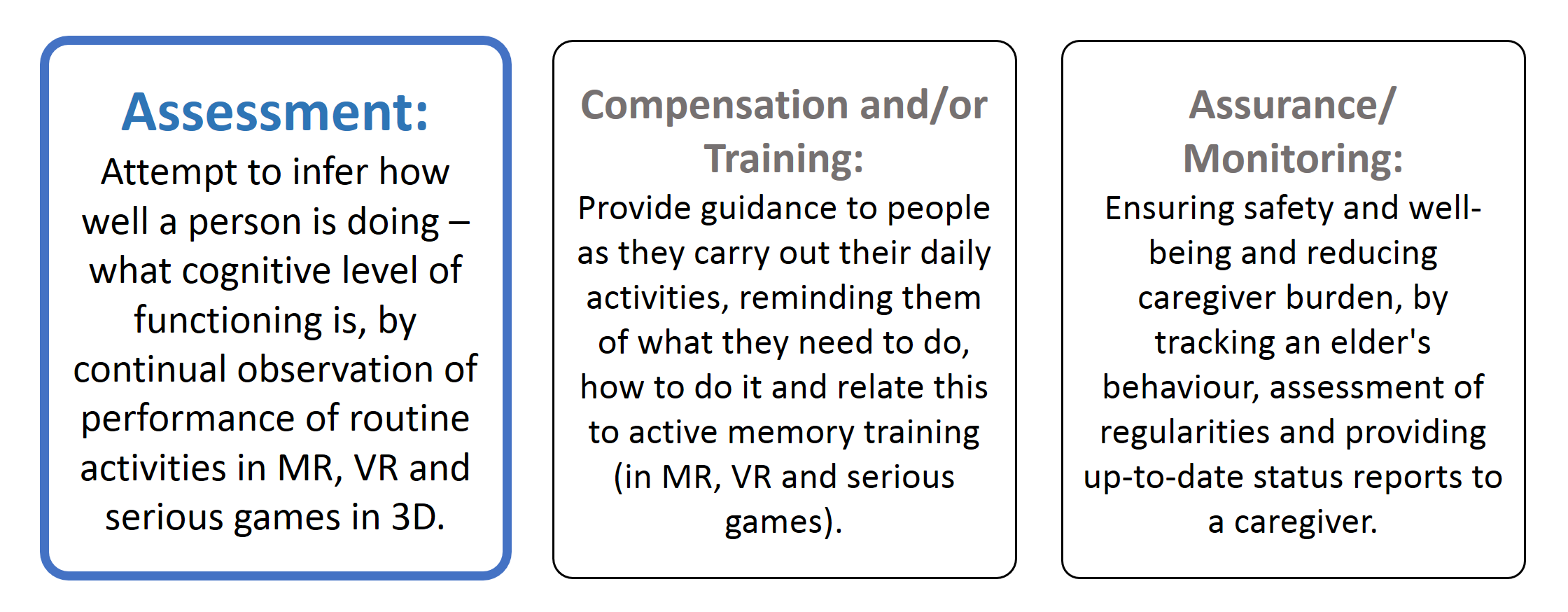

The goal of the Interakt (interactive cognitive assessment tool) project is to improve the diagnostic process for dementia and other forms of cognitive impairment by digitising and digitalising standardised cognitive assessments. We target everyday procedures in hospitals (day clinics) and at home.

Interakt (2017–2020) is a BMBF-funded project that builds on Kognit.

Research Questions

Our central question is which interface design principles most effectively support automatic digital assessments through multisensory monitoring and automatic multimodal feedback.

At the computational level, it will be important to investigate approaches to designing systems that recognise and model patients' cognitive and affective states.

At the interface level, it will be important to devise design principles that can inform the development of innovative multimodal-multisensor interfaces for a variety of patient populations, test contexts, and learning environments.

Objectives

Most cognitive assessments used in medicine today are paper-and-pencil based. These tests are expensive and time-consuming, since each assessment must be conducted by a doctor, physiotherapist, or psychologist. In addition, the results can be biased.

Using smartpen technologies and mobile devices, we collect and evaluate multimodal multisensory data (e.g., speech and handwriting).

We address the question of what role automation can play in designing adaptive multimodal-multisensor technologies that support precise assessments. To this end, we will develop an online test environment.

Publications

- Daniel Sonntag: Interakt – A Multimodal Multisensory Interactive Cognitive Assessment Tool, 2017

- Daniel Sonntag: Interactive Cognitive Assessment Tools: A Case Study on Digital Pens for the Clinical Assessment of Dementia, 2018

- Alexander Prange, Michael Barz, Daniel Sonntag: A categorisation and implementation of digital pen features for behaviour characterisation, 2018

- Michael Barz, Florian Daiber, Daniel Sonntag, Andreas Bulling: Error-aware gaze-based interfaces for robust mobile gaze interaction, ETRA 2018

- Mira Niemann, Alexander Prange, Daniel Sonntag: Towards a Multimodal Multisensory Cognitive Assessment Framework, CBMS 2018

- Alexander Prange, Daniel Sonntag: Modeling Cognitive Status through Automatic Scoring of a Digital Version of the Clock Drawing Test, UMAP 2019

- Alexander Prange, Mira Niemann, Antje Latendorf, Anika Steinert, Daniel Sonntag: Multimodal Speech-based Dialogue for the Mini-Mental State Examination, CHI Extended Abstracts 2019

People

Daniel Sonntag (DFKI, supervisor)

Alexander Prange (DFKI, PhD student)

Michael Barz (DFKI, PhD student)

Jan Zacharias (DFKI, Software Engineer)

Andreas Luxenburger (DFKI, PhD student)

Mehdi Moniri (DFKI, adjunct collaborator, PhD student)

Vahid Rahmaniv (DFKI, GPU infrastructure)

Aditya Gulati (DFKI, cognitive load)

Sven Stauden (DFKI, object classification)

Philip Hell (DFKI, digital pen)

Nikolaj Woroschilow (DFKI, interaction design)

Mira Niemann (DFKI, speech assistants)

Carolin Grieser (DFKI, digital pen)

Partners

DFKI

Charité

Synaptikon (Neuronation)

Doc Cirrus

EITCO

Sponsored by the German Federal Ministry of Education and Research (BMBF)