Medical Foundation Models: From Vision to Multimodal and Trustworthy AI

Funding period 2024-2027

Contact: Duy Nguyen

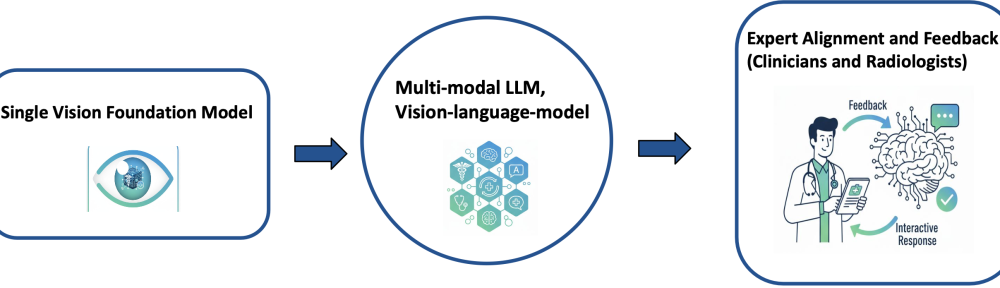

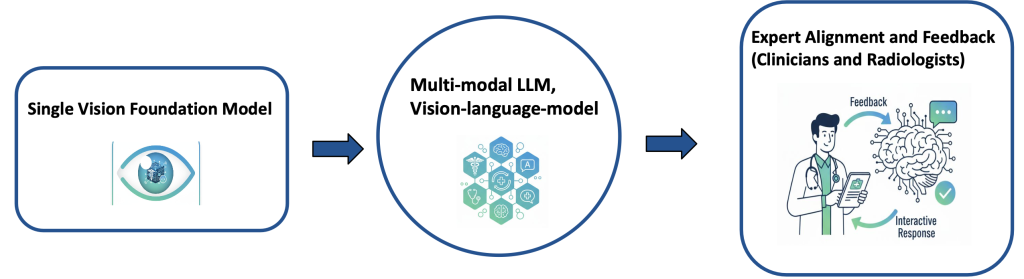

Our research develops large-scale foundation models for medical imaging and multi-modal reasoning. These models are designed to work alongside human experts and to improve reliability, interpretability, and generalization across medical domains.

Research Results

Our group develops large medical foundation models that integrate vision, language, and structured reasoning to advance understanding across medical imaging and clinical data. We focus on:

- Single- to Multi-Modal Learning: evolving from vision-only models to unified models that understand and generate medical knowledge across text, images, and clinical data.

- Transparent and Structured Reasoning: enabling models to explain their predictions through interpretable, step-by-step reasoning processes.

- Human–AI Collaboration: designing systems that learn from clinician feedback and align with expert judgment.

- Continual Self-Improvement: incorporating human feedback and new data to refine model understanding and reliability over time, including in low-resource data settings.

Partners

We collaborate with leading institutions and hospital partners to advance trustworthy and generalizable AI for healthcare.

Selected Publications

Sonntag, Daniel, Michael Barz, and Thiago Gouvea. “A look under the hood of the Interactive Deep Learning Enterprise (No-IDLE).” DFKI Technical Report, 2024.

Nguyen, Duy Minh Ho, Hoang Nguyen, Nghiem Diep, Tan Ngoc Pham, Tri Cao, Binh Nguyen, Paul Swoboda et al. “LVM-Med: Learning large-scale self-supervised vision models for medical imaging via second-order graph matching.” Advances in Neural Information Processing Systems (NeurIPS), 2023.

Nguyen, Duy Minh Ho, Nghiem Tuong Diep, Trung Quoc Nguyen, Hoang-Bao Le, Tai Nguyen, Anh-Tien Nguyen, TrungTin Nguyen et al. “ExGra-Med: Extended context graph alignment for medical vision-language models.” The Thirty-ninth Annual Conference on Neural Information Processing Systems (NeurIPS), 2025.

Nguyen, Anh-Tien, Duy Minh Ho Nguyen, Nghiem Tuong Diep, Trung Quoc Nguyen, Nhat Ho, Jacqueline Michelle Metsch, Miriam Cindy Maurer, Daniel Sonntag, Hanibal Bohnenberger, and Anne-Christin Hauschild. “MGPATH: Vision-Language Model with Multi-Granular Prompt Learning for Few-Shot WSI Classification.” Transactions on Machine Learning Research (TMLR), 2025.

Le, Huy M., Dat Tien Nguyen, Ngan T. T. Vo, Tuan D. Q. Nguyen, Nguyen Le Binh, Duy MH Nguyen, Daniel Sonntag, Lizi Liao, and Binh T. Nguyen. “Reinforce Trustworthiness in Multimodal Emotional Support System.” Proceedings of the AAAI Conference on Artificial Intelligence (Oral), 2026.

Le-Duc, Khai*, Duy MH Nguyen*, Phuong TH Trinh, Tien-Phat Nguyen, Nghiem T. Diep, An Ngo, Tung Vu et al. “S-Chain: Structured Visual Chain-of-Thought For Medicine.” arXiv preprint arXiv:2510.22728, 2025.